Introduction

I started my career as a TEM engineer in the hardware industry. In the beginning, 80% of my working hours were devoted to data collection in the dark, cold TEM room. With the development of modern TEM tools, data acquisition has shrunk to only 10% of my time, for several reasons:

1. The tools are built to be much more stable, so the need for time-consuming alignment is eliminated.

2. Pre-customized auto functions perform combined operations with a single click.

3. The recipe editor enables running recipes that control the sample stage, beam, input variables and output files.

The third item is what I'd like to expand on today. Although the recipe editor technically allows fully automated, unsupervised data acquisition thanks to the development of computer vision, in a research environment I have found it better to create recipes that are semi-automatic. Such recipes take advantage of both fast machine control and the quick judgement of experienced engineers.

Recipe Overview

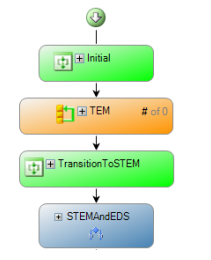

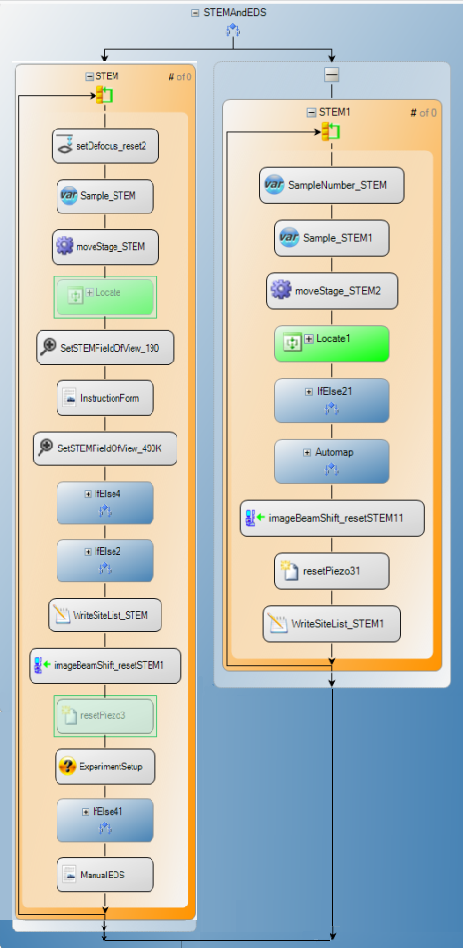

The recipe is divided into 4 major blocks:

- The #1 block is to initialize the job and import variables.

- The #2 block is for TEM imaging.

- The #3 block is for transitioning into STEM mode.

- The #4 block is for STEM imaging and EDX mapping.

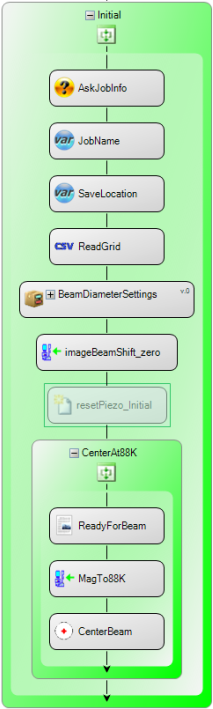

The initial block

Before running the recipe, I need to have a .csv file ready with a single column of all sample names of the loaded grid. The recipe will ask for the jobID, number of samples to run and which .csv file to load. The jobID will be used to set the working directory. The number of samples is needed to figure out how many iterations to run when taking results.
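The recipe editor handles these prompts through its own graphical nodes, but the same logic can be sketched in plain Python to make the inputs explicit. This is only an illustration under assumptions: the function names `load_sample_names` and `init_job`, and the idea of naming the working directory after the jobID, are hypothetical and not part of the recipe editor.

```python
import csv
from pathlib import Path


def load_sample_names(csv_path):
    """Read a single-column CSV and return the non-empty sample names in order."""
    with open(csv_path, newline="") as f:
        return [row[0].strip() for row in csv.reader(f) if row and row[0].strip()]


def init_job(job_id, n_samples, csv_path, root="."):
    """Create the job's working directory and select the samples to iterate over.

    Assumption: the working directory is simply <root>/<job_id>.
    """
    names = load_sample_names(csv_path)
    if not 0 < n_samples <= len(names):
        raise ValueError(f"n_samples must be between 1 and {len(names)}")
    workdir = Path(root) / job_id
    workdir.mkdir(parents=True, exist_ok=True)
    # The number of samples fixes how many iterations the later blocks run.
    return workdir, names[:n_samples]
```

Checking the number of samples against the .csv file up front means a mismatch is caught before any time is spent at the microscope.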

The 2nd part of the initial block is to reset the tool to ensure a zero offset start.

Although the Recipe Editor can locate each sample from low-mag mode, an accurate search for the sample coordinates with correct focus and tilt takes too long to optimize automatically. Instead, at this step I save all sample locations in the stage navigation panel with rough accuracy, and manually locate the features on each sample later.

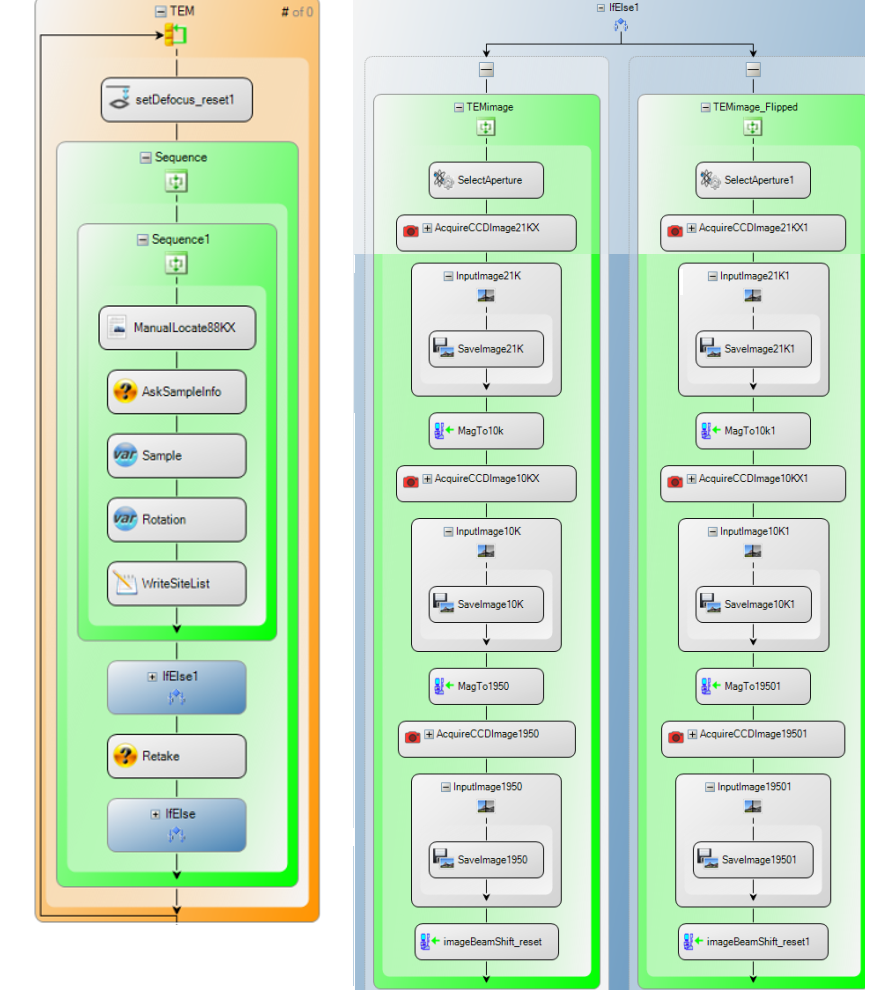

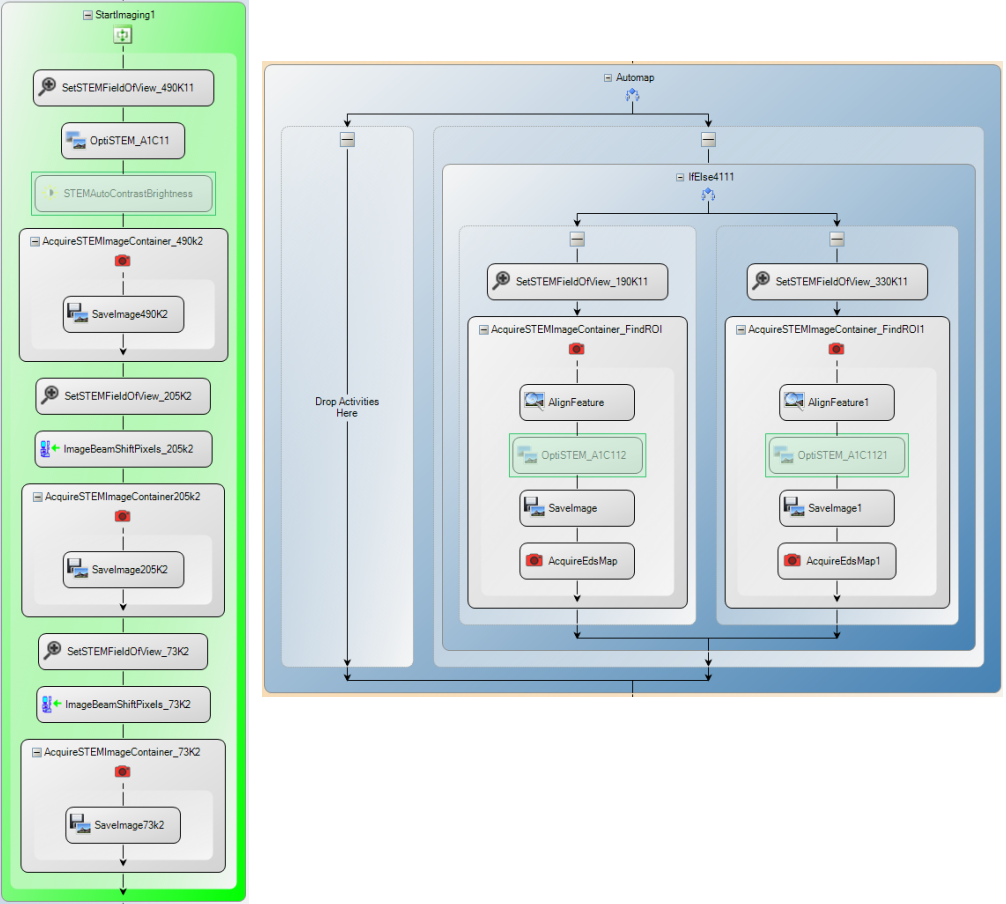

The TEM imaging block

This block is an iteration through all samples on the grid. For each sample, the defocus is reset, the sample feature is found manually at the highest imaging magnification, and then the whole set of images is taken. The first ifelse section takes images at different magnifications; how the beam is shifted for each image depends on whether the sample has been placed upside down. The second ifelse section takes additional images if necessary.
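The two ifelse sections can be pictured as a small planning function. The sketch below is not the recipe itself: the name `plan_tem_images` is hypothetical, and it assumes that an upside-down sample simply flips the sign of each beam shift, which may not match how a given tool defines its shifts.

```python
def plan_tem_images(magnifications, beam_shifts, upside_down, extra_magnifications=()):
    """Return a list of (magnification, (shift_x, shift_y)) acquisition steps.

    Assumption: an upside-down sample mirrors the beam shift, modelled here
    as a sign flip on both axes.
    """
    sign = -1 if upside_down else 1
    steps = []
    # First ifelse section: one image per magnification, with an
    # orientation-dependent beam shift.
    for mag, (dx, dy) in zip(magnifications, beam_shifts):
        steps.append((mag, (sign * dx, sign * dy)))
    # Second ifelse section: optional additional images, taken without a shift.
    for mag in extra_magnifications:
        steps.append((mag, (0.0, 0.0)))
    return steps


# Example with made-up magnifications and shifts (arbitrary units).
plan = plan_tem_images([20000, 100000], [(1.0, 0.0), (0.2, -0.1)], upside_down=True)
```

Keeping the orientation decision in one place means the rest of the imaging sequence is identical for both sample orientations.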

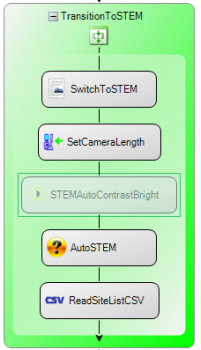

The transition block

This section mainly prompts the user to load the STEM registration file and automatically checks whether the camera length and contrast are consistent. In the previous section, the accurate coordinates (x, y, z, Alpha, Beta) were saved to a new .csv file, so each sample can be imaged faster in STEM mode.
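As a rough illustration of the two ideas in this block, the sketch below writes the refined stage coordinates to a .csv file, reads them back, and compares a set of expected settings against the current ones. The column names, the function names and the tolerance are assumptions made for this example; the actual file layout produced by the recipe editor may differ.

```python
import csv
import math

# Hypothetical column layout for the coordinate file.
FIELDS = ["sample", "x", "y", "z", "alpha", "beta"]


def save_positions(path, positions):
    """Write one row per sample with its refined stage coordinates."""
    with open(path, "w", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDS)
        writer.writeheader()
        writer.writerows(positions)


def load_positions(path):
    """Read the coordinate file back, converting the numeric columns to float."""
    with open(path, newline="") as f:
        return [
            {k: (v if k == "sample" else float(v)) for k, v in row.items()}
            for row in csv.DictReader(f)
        ]


def settings_mismatches(expected, actual, rel_tol=1e-3):
    """Return the names of settings that are missing or differ from the expected value."""
    return [
        name
        for name, value in expected.items()
        if name not in actual or not math.isclose(value, actual[name], rel_tol=rel_tol)
    ]
```

An empty list from `settings_mismatches` would mean the checked settings, for example camera length and contrast, agree within the chosen tolerance.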

The STEM block

The STEM block goes through all samples on the grid one more time, now in STEM mode. Here we can take dark-field images and add EDS maps. The ifelse condition asks whether to run this section with full automation or still under supervision. Because the sample tilt has already been optimized in the previous TEM section, locating features through pattern recognition is workable here. However, any operation that involves fine features and automatic pattern recognition has to go through a cascade of magnifications, so most of the time manual intervention is adopted. As with TEM images, taking a STEM image consists of resetting the beam shift, changing the magnification, acquiring the image and saving it. Automap can also be used if the user decides to acquire maps automatically when the samples and the training image are alike.
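The four-step acquisition sequence can be sketched against a stand-in microscope object. Nothing here is a real microscope API: `LoggingScope` is a hypothetical class that only records the calls it receives, and the file naming pattern is invented for the example.

```python
class LoggingScope:
    """Hypothetical stand-in for a microscope interface; it only logs calls."""

    def __init__(self):
        self.log = []

    def reset_beam_shift(self):
        self.log.append("reset_beam_shift")

    def set_magnification(self, mag):
        self.log.append(f"set_magnification {mag}")

    def acquire(self):
        self.log.append("acquire")
        return object()  # placeholder for image data

    def save(self, image, filename):
        self.log.append(f"save {filename}")


def acquire_stem_series(scope, sample, magnifications):
    """Run reset -> change magnification -> acquire -> save for each magnification."""
    filenames = []
    for mag in magnifications:
        scope.reset_beam_shift()
        scope.set_magnification(mag)
        image = scope.acquire()
        filename = f"{sample}_STEM_{mag}x.tif"  # made-up naming pattern
        scope.save(image, filename)
        filenames.append(filename)
    return filenames
```

Writing the sequence against an interface like this makes it easy to dry-run the order of operations before any beam time is used.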

Conclusion

With the popularity of remote work and flexible schedules, this technology gives TEM engineers and scholars a more versatile work style, saves precious brain power for more challenging work and minimizes idle tool time. As this article shows, the actual implementation doesn't have to be completely automatic. Empirical experience taught me that fully automatic runs take longer and are susceptible to error interruptions and poor data quality. The combination of human intervention and automation, by contrast, can be quite efficient, especially in a research environment where training images can easily become obsolete. I would like to encourage more TEM engineers to try such recipe techniques, embrace the beauty of "smart" working, and spend more time on other frontier subjects such as computer vision and deep learning.